Gaussian XOR and Gaussian XNOR Experiment¶

One key goal of synergistic learning is to be able to continually improve upon past performance with the introduction of new data, without forgetting too much of the past tasks. This transfer of information can be evaluated using a variety of metrics; however, here, we use a generalization of Pearl’s transfer-benefit ratio (TBR) in both the forward and backward directions.

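As described in Vogelstein, et al. (2020), the forward transfer efficiency of an algorithm $f$ for task $t$ is the ratio of its expected generalization error on task $t$ when trained on task $t$ data alone to its expected generalization error when trained on task $t$ data together with the data from all earlier tasks:

$$\mathrm{FTE}^t(f) := \frac{\mathbb{E}\left[R^t\left(f(\mathbf{D}^{t})\right)\right]}{\mathbb{E}\left[R^t\left(f(\mathbf{D}^{\le t})\right)\right]}$$

Here $R^t$ is the generalization error on task $t$, $\mathbf{D}^{t}$ is the data from task $t$ only, and $\mathbf{D}^{\le t}$ is the data from task $t$ and all earlier tasks. If $\mathrm{FTE}^t(f) > 1$, the algorithm demonstrates positive forward transfer: data from earlier tasks has improved performance on task $t$.

Similarly, the backward transfer efficiency of $f$ for task $t$ is the ratio of its expected generalization error on task $t$ when trained on data up to and including task $t$ to its expected generalization error when trained on all of the data $\mathbf{D}$, including later tasks:

$$\mathrm{BTE}^t(f) := \frac{\mathbb{E}\left[R^t\left(f(\mathbf{D}^{\le t})\right)\right]}{\mathbb{E}\left[R^t\left(f(\mathbf{D})\right)\right]}$$

If $\mathrm{BTE}^t(f) > 1$, the algorithm demonstrates positive backward transfer: data from later tasks has improved performance on task $t$.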

Synergistic learning in a simple environment can therefore be demonstrated using two simple tasks: Gaussian XOR and Gaussian Not-XOR (XNOR). Here, forward transfer efficiency is the ratio of generalization errors for XNOR, whereas backward transfer efficiency is the ratio of generalization errors for XOR. These two tasks share the same discriminant boundaries, so learning can be easily transferred between them.
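To make the ratio concrete, the following minimal sketch computes a transfer efficiency from two error curves. It assumes the convention that the single-task error is the numerator, so that values above 1 indicate positive transfer. The function name `transfer_efficiency` and the error values are hypothetical placeholders chosen for illustration, not results from this experiment.

```python
import numpy as np


def transfer_efficiency(single_task_error, multi_task_error):
    """Element-wise ratio of single-task to multi-task generalization error.

    Assumed convention: a value above 1 means that the extra task data helped.
    """
    single = np.asarray(single_task_error, dtype=float)
    multi = np.asarray(multi_task_error, dtype=float)
    return single / multi


# Hypothetical XNOR errors at three sample sizes (illustrative values only).
xnor_single = np.array([0.30, 0.20, 0.15])    # learner trained on XNOR alone
xnor_with_xor = np.array([0.25, 0.16, 0.12])  # learner that also saw XOR first

# Forward transfer efficiency on XNOR under the assumed convention.
fte = transfer_efficiency(xnor_single, xnor_with_xor)
print(fte)
```

With these placeholder numbers every ratio is above 1, which is what positive forward transfer looks like. The backward direction would use the XOR errors before and after the XNOR data arrives.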

This experiment compares the performance of synergistic forests with that of uncertainty forests on these tasks.

[1]:

import numpy as np

import functions.xor_xnor_functions as fn

from proglearn.sims import generate_gaussian_parity

Note: This notebook tutorial uses functions stored externally within functions/xor_xnor_functions.py, to simplify presentation of code. These functions are imported above, along with other libraries.

Classification Problem¶

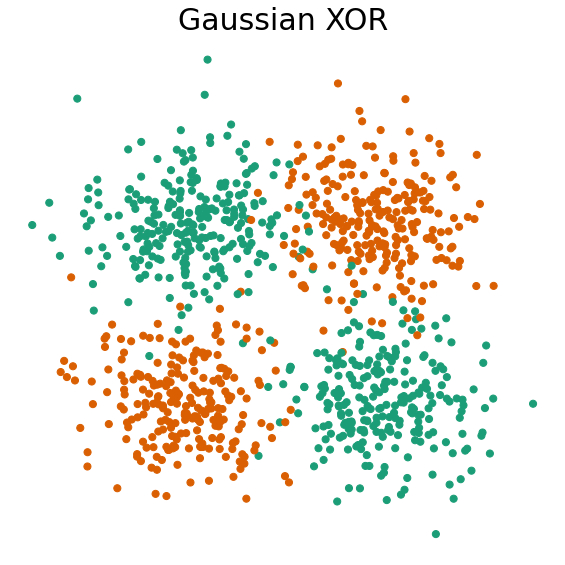

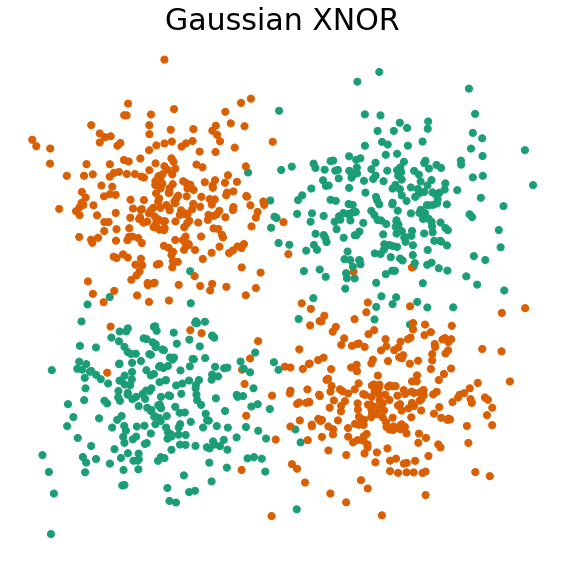

First, let’s visualize Gaussian XOR and XNOR.

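Gaussian XOR is a two-class classification problem in which each class is drawn from a mixture of two Gaussians whose centers sit in diagonally opposite quadrants, so the class label alternates from one quadrant to the next.

Gaussian XNOR has the same distribution as Gaussian XOR, but with the class labels flipped: the quadrants that hold Class 0 in XOR hold Class 1 in XNOR, and vice versa.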

Within the proglearn package, we can make use of the simulations within the sims folder to generate simulated data. The generate_gaussian_parity function within gaussian_sim.py can be used to create the Gaussian XOR and XNOR problems. Let’s generate data and plot it to see what these problems look like!

[2]:

# call function to return gaussian xor and xnor data:

X_xor, y_xor = generate_gaussian_parity(1000)

X_xnor, y_xnor = generate_gaussian_parity(1000, angle_params=np.pi / 2)

# plot and format:

fn.plot_xor_xnor(X_xor, y_xor, "Gaussian XOR")

fn.plot_xor_xnor(X_xnor, y_xnor, "Gaussian XNOR")
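Passing `angle_params=np.pi / 2` rotates the XOR distribution by 90 degrees, which has the same effect as flipping the class labels. The NumPy-only sketch below illustrates why. It is not the proglearn implementation: the helper `sample_gaussian_xor`, the blob centers, and the standard deviation are assumptions chosen for illustration.

```python
import numpy as np


def sample_gaussian_xor(n, std=0.25, angle=0.0, seed=None):
    """Sample a four-blob Gaussian XOR problem, optionally rotated.

    The centers and std are assumed values, not taken from proglearn.
    """
    rng = np.random.default_rng(seed)
    # One blob per quadrant; labels alternate between neighboring quadrants.
    centers = np.array([[-0.5, 0.5], [0.5, 0.5], [-0.5, -0.5], [0.5, -0.5]])
    labels = np.array([0, 1, 1, 0])
    blob = rng.integers(0, 4, size=n)
    X = centers[blob] + std * rng.standard_normal((n, 2))
    y = labels[blob]
    # Rotate every point counter-clockwise by `angle` radians.
    c, s = np.cos(angle), np.sin(angle)
    rotation = np.array([[c, -s], [s, c]])
    return X @ rotation.T, y


X_a, y_a = sample_gaussian_xor(2000, seed=0)
X_b, y_b = sample_gaussian_xor(2000, angle=np.pi / 2, seed=0)

# Quadrant rule: predict class 1 when both coordinates share a sign.
rule_a = (X_a[:, 0] * X_a[:, 1] > 0).astype(int)
rule_b = (X_b[:, 0] * X_b[:, 1] > 0).astype(int)

acc_unrotated = np.mean(rule_a == y_a)          # rule matches the labels
acc_rotated_flipped = np.mean(rule_b == 1 - y_b)  # rule matches flipped labels
print(acc_unrotated, acc_rotated_flipped)
```

The same quadrant rule that fits the unrotated labels fits the flipped labels after rotation, which is why the two tasks share decision boundaries.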

The Experiment¶

Now that we have generated the data, we can prepare to run the experiment. The function for running the experiment, experiment, can be found within functions/xor_xnor_functions.py.

We first declare the hyperparameters to be used for the experiment, which are as follows:

- mc_rep: number of Monte Carlo repetitions of the progressive learning algorithm
- n_test: number of XOR/XNOR data points in the test set
- n_trees: number of trees
- n_xor: array of XOR sample sizes fed to the learner, ranging from 50 to 725 in increments of 25
- n_xnor: array of XNOR sample sizes fed to the learner, ranging from 25 to 725 in increments of 25

[3]:

# define hyperparameters:

mc_rep = 10

n_test = 1000

n_trees = 10

n_xor = (100 * np.arange(0.5, 7.50, step=0.25)).astype(int)

n_xnor = (100 * np.arange(0.25, 7.50, step=0.25)).astype(int)
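As a quick check of the two sample-size grids, the snippet below rebuilds them exactly as in the cell above and prints their endpoints and lengths. Only NumPy is needed.

```python
import numpy as np

# Same expressions as in the hyperparameter cell.
n_xor = (100 * np.arange(0.5, 7.50, step=0.25)).astype(int)
n_xnor = (100 * np.arange(0.25, 7.50, step=0.25)).astype(int)

# np.arange excludes the stop value, so both grids end at 725, not 750.
print(n_xor[0], n_xor[-1], len(n_xor))     # 50 725 28
print(n_xnor[0], n_xnor[-1], len(n_xnor))  # 25 725 29
```

The XNOR grid has one more entry than the XOR grid because it starts one step earlier, at 25 samples.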

Once those are determined, the experiment can be initialized and performed. We iterate over the values in n_xor and n_xnor sequentially, running each experiment for the number of iterations declared in mc_rep.

[4]:

# running the experiment:

# create empty arrays for storing results

mean_error = np.zeros((6, len(n_xor) + len(n_xnor)))

std_error = np.zeros((6, len(n_xor) + len(n_xnor)))

mean_te = np.zeros((4, len(n_xor) + len(n_xnor)))

std_te = np.zeros((4, len(n_xor) + len(n_xnor)))

# run the experiment

mean_error, std_error, mean_te, std_te = fn.run(

    mc_rep, n_test, n_trees, n_xor, n_xnor, mean_error, std_error, mean_te, std_te

)

Great! The experiment should now be complete, with the results stored in four arrays: mean_error, std_error, mean_te, and std_te.
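The general shape of such a Monte Carlo run can be sketched without proglearn. The example below is an assumption-laden stand-in, not the code in functions/xor_xnor_functions.py: it swaps the forests for a 1-nearest-neighbor classifier, uses an assumed four-blob XOR layout, and the names `sample_xor`, `one_nn_error`, and `monte_carlo` are hypothetical. It only shows how a mean and standard deviation of the test error are collected for each training-set size.

```python
import numpy as np


def sample_xor(n, rng, std=0.25):
    """Draw n points from an assumed four-blob Gaussian XOR layout."""
    centers = np.array([[-0.5, 0.5], [0.5, 0.5], [-0.5, -0.5], [0.5, -0.5]])
    labels = np.array([0, 1, 1, 0])
    blob = rng.integers(0, 4, size=n)
    X = centers[blob] + std * rng.standard_normal((n, 2))
    return X, labels[blob]


def one_nn_error(n_train, rng, n_test=200):
    """Test error of a 1-nearest-neighbor classifier (stand-in for a forest)."""
    X_tr, y_tr = sample_xor(n_train, rng)
    X_te, y_te = sample_xor(n_test, rng)
    # Squared distances between every test point and every training point.
    dist = ((X_te[:, None, :] - X_tr[None, :, :]) ** 2).sum(axis=-1)
    predictions = y_tr[dist.argmin(axis=1)]
    return np.mean(predictions != y_te)


def monte_carlo(sample_sizes, mc_rep, seed=0):
    """Repeat each trial mc_rep times; return per-size mean and std of error."""
    rng = np.random.default_rng(seed)
    errors = np.array(
        [[one_nn_error(n, rng) for _ in range(mc_rep)] for n in sample_sizes]
    )
    return errors.mean(axis=1), errors.std(axis=1)


mean_err, std_err = monte_carlo([25, 100, 400], mc_rep=10)
print(mean_err, std_err)
```

Averaging over repetitions is what smooths the error curves. A larger `mc_rep` gives a more stable average at the cost of run time.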

Visualizing the Results¶

Now that the experiment is complete, the results can be visualized by extracting the data from these arrays and plotting it.

Here, we again use a function from functions/xor_xnor_functions.py to help with plotting:

- plot_error_and_eff: plots generalization error and transfer efficiency for progressive learning and random forests

[5]:

# plot data

%matplotlib inline

fn.plot_error_and_eff(n_xor, n_xnor, mean_error, mean_te, "XOR", "XNOR")

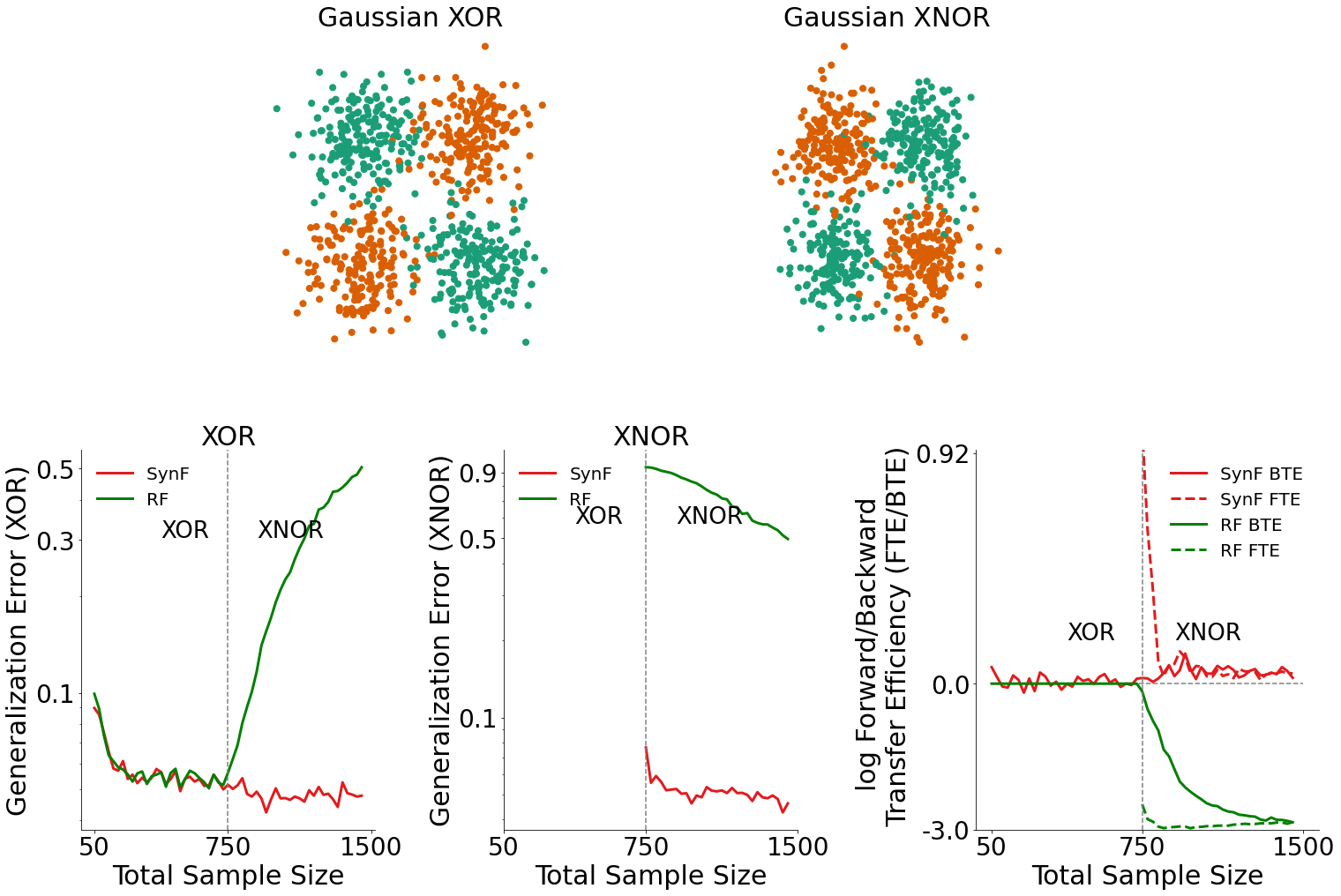

Generalization Error for XOR Data (bottom left plot)¶

By plotting the generalization error for XOR data, we can see how the introduction of XNOR data influenced the performance of both the synergistic forest (SynF) and the random forest.

In the bottom left plot, we see that once XNOR data is available, SynF outperforms the random forest on XOR data.

Generalization Error for XNOR Data (bottom middle plot)¶

Similarly, by plotting the generalization error for XNOR data, we can also see how the presence of XOR data influenced the performance of both algorithms.

In the bottom middle plot, we see that, given that XOR data is available, SynF outperforms the random forest at classifying XNOR data.

Transfer Efficiency for XOR Data (bottom right plot)¶

Given the generalization errors plotted above, we can calculate the transfer efficiency as the ratio of the generalization error of the single-task forest to that of SynF, so that values above 1 indicate positive transfer. The forward and backward transfer efficiencies are then plotted in the bottom right plot.

SynF demonstrates both positive forward and positive backward transfer in this environment.