Gaussian XOR and Gaussian R-XOR BTE with CPD Experiment

One key goal of progressive learning is to continually improve on past performance as new data arrives, without forgetting too much about past tasks. This transfer of information can be evaluated with a generalization of Pearl’s transfer-benefit ratio (TBR) applied in the backward direction.

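As described in Vogelstein, et al. (2020), the backward transfer efficiency of task $t$ is the expected risk on task $t$ when the learner has seen only the data up to that task, divided by the expected risk on task $t$ when the learner has seen all of the data:

$$\mathrm{BTE}^t(f_n) := \frac{\mathbb{E}[R^t(f_n^{<t})]}{\mathbb{E}[R^t(f_n)]}$$

If $\mathrm{BTE}^t(f_n) > 1$, the learner has used data from later tasks to improve its performance on task $t$, i.e. it shows positive backward transfer.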

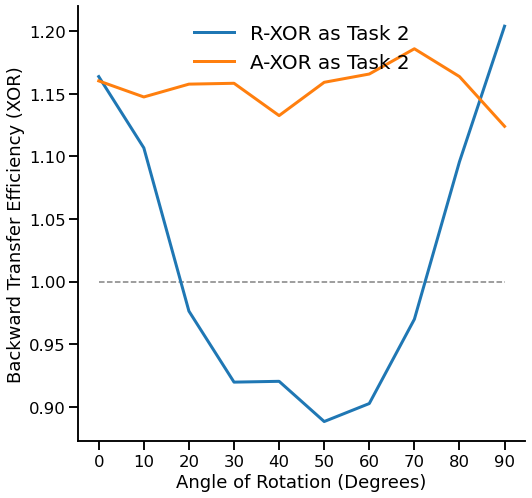

Progressive learning in a simple environment can therefore be demonstrated using two simple tasks: Gaussian XOR and Gaussian R-XOR. Here, backward transfer efficiency is the ratio of generalization errors for XOR. These two tasks share the same discriminant boundaries, so learning can be easily transferred between them. However, as we have seen in the original Gaussian XOR and Gaussian R-XOR Experiment, backward transfer efficiency can suffer if the new task differs too much from past tasks.
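To make the ratio concrete, here is a minimal sketch of how a backward transfer efficiency could be computed from per-repetition XOR error rates. The function name `backward_transfer_efficiency` and the error values are hypothetical and chosen only for illustration; averaging the errors before dividing is an assumption about how the ratio is estimated.

```python
import numpy as np

def backward_transfer_efficiency(err_before, err_after):
    """Ratio of mean task-1 error before vs. after the learner sees task 2."""
    err_before = np.asarray(err_before, dtype=float)
    err_after = np.asarray(err_after, dtype=float)
    return err_before.mean() / err_after.mean()

# Hypothetical XOR error rates over three repetitions (illustrative only)
xor_only = [0.20, 0.22, 0.18]        # learner trained on XOR alone
xor_then_rxor = [0.16, 0.17, 0.15]   # learner trained on XOR, then R-XOR

bte = backward_transfer_efficiency(xor_only, xor_then_rxor)
print(f"BTE = {bte:.2f}")
```

A value above 1 means the XOR error went down after the second task was added; a value below 1 means the second task hurt XOR performance.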

In this experiment, we will try to mitigate the detriment of adding new tasks that are too different by adapting the domain of the new task to the domain of the old task, while maintaining the uniquely sampled distribution of the new task. To accomplish this, we will use the Coherent Point Drift (CPD) algorithm described in Myronenko & Song (2009) to adapt the new tasks to the old tasks through an affine registration.
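An affine registration looks for a matrix B and a translation t that map one point set onto another. The sketch below is not CPD: to stay short and dependency-free, it assumes the point correspondences are already known and solves for B and t by ordinary least squares, whereas CPD estimates soft correspondences itself. The helper name `fit_affine` and the synthetic data are hypothetical.

```python
import numpy as np

def fit_affine(source, target):
    """Least-squares affine map (B, t) with source @ B + t close to target.

    Assumes row i of `source` corresponds to row i of `target`. CPD does not
    need this assumption because it estimates correspondences on its own.
    """
    ones = np.ones((source.shape[0], 1))
    design = np.hstack([source, ones])               # shape (n, d + 1)
    params, *_ = np.linalg.lstsq(design, target, rcond=None)
    return params[:-1], params[-1]                   # B: (d, d), t: (d,)

rng = np.random.default_rng(0)
target = rng.normal(size=(200, 2))                   # stand-in for old-task points

theta = np.pi / 4
rot = np.array([[np.cos(theta), -np.sin(theta)],
                [np.sin(theta),  np.cos(theta)]])
source = target @ rot.T + np.array([0.3, -0.1])      # rotated and shifted copy

B, t = fit_affine(source, target)
aligned = source @ B + t                             # source mapped onto target
print(np.allclose(aligned, target))
```

In the experiment itself, the two point sets are sampled independently, so no row-to-row correspondence exists; that is the gap CPD fills.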

[1]:

import numpy as np

import functions.xor_rxor_with_cpd_functions as fn
from proglearn.sims import generate_gaussian_parity

# Install pycpd if it is not available
try:
    import pycpd
except ImportError:
    !pip install pycpd
    import pycpd

Using TensorFlow backend.

Note: This notebook tutorial uses functions stored externally within functions/xor_rxor_with_cpd_functions.py, to simplify presentation of code. These functions are imported above, along with other libraries.

Classification Problem with Domain Adaptation

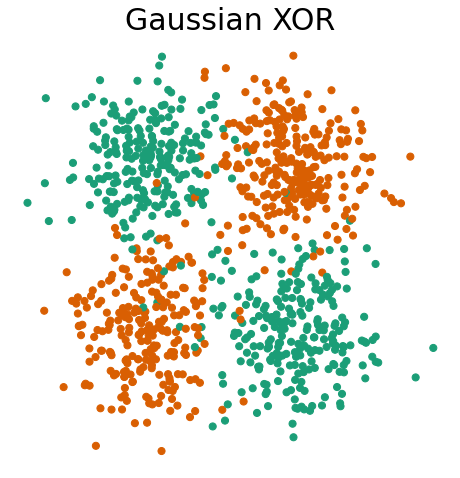

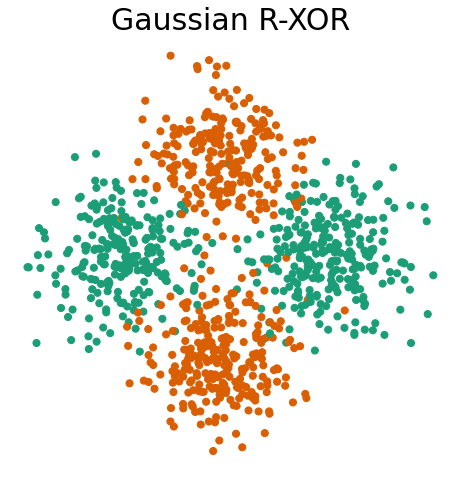

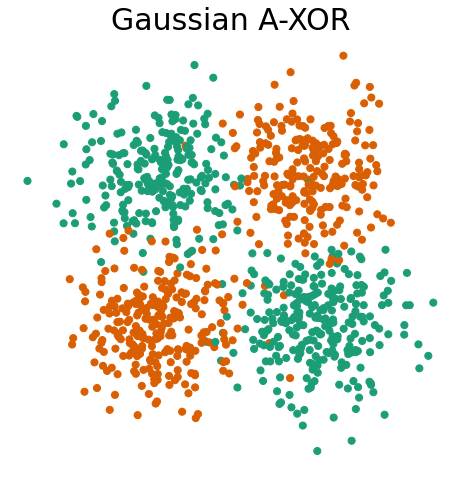

First, let’s visualize Gaussian XOR, Gaussian R-XOR, and Gaussian R-XOR adapted to XOR.

Gaussian XOR is a two-class classification problem, where… - Class 0 is drawn from two Gaussians with

Gaussian R-XOR has the same distribution as Gaussian XOR, but with the classes rotated by a given angle.

Gaussian R-XOR adapted to XOR will be an unrotated version of the above R-XOR version to match XOR. We will call this adapted XOR or A-XOR for short.
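The relationship between XOR and R-XOR comes down to a 2-D rotation. The sketch below rotates four illustrative cluster centres by π/4; the centre coordinates, the helper name `rotate_points`, and the counter-clockwise convention are assumptions made for illustration and are not taken from proglearn.

```python
import numpy as np

def rotate_points(X, angle):
    """Rotate 2-D points (rows of X) counter-clockwise by `angle` radians."""
    c, s = np.cos(angle), np.sin(angle)
    R = np.array([[c, -s], [s, c]])
    return X @ R.T

# Four illustrative cluster centres, one per quadrant (hypothetical values)
centers = np.array([[1.0, 1.0], [-1.0, -1.0], [1.0, -1.0], [-1.0, 1.0]])
labels = np.array([0, 0, 1, 1])   # XOR labelling; rotation leaves labels unchanged

rotated = rotate_points(centers, np.pi / 4)
print(np.round(rotated, 3))
```

After the rotation the centres sit on the coordinate axes rather than on the diagonals, so a classifier tuned to the XOR quadrants no longer lines up with the class layout.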

Within the proglearn package, we can make use of the simulations within the sims folder to generate simulated data. The generate_gaussian_parity function within gaussian_sim.py can be used to create the Gaussian XOR and R-XOR problems. Let’s generate data and plot it to see what these problems look like!

Additionally, we will use the cpd_reg function from functions/xor_rxor_with_cpd_functions.py to adapt our R-XOR to XOR, producing A-XOR. Observe that the distribution of points matches exactly that of the generated R-XOR, except that it is rotated to match XOR.

[2]:

# call function to return gaussian xor and r-xor data:
X_xor, y_xor = generate_gaussian_parity(1000)
X_rxor, y_rxor = generate_gaussian_parity(1000, angle_params=np.pi / 4)

# call function to adapt r-xor to xor (labels are carried over unchanged):
X_axor = fn.cpd_reg(X_rxor.copy(), X_xor.copy(), max_iter=50)
y_axor = y_rxor.copy()

# plot and format:
fn.plot_xor_rxor(X_xor, y_xor, "Gaussian XOR")
fn.plot_xor_rxor(X_rxor, y_rxor, "Gaussian R-XOR")
fn.plot_xor_rxor(X_axor, y_axor, "Gaussian A-XOR")

The Experiment (Various Angles vs BTE)

Now that we have generated the data, we can prepare to run the experiment. The function for running the experiment, experiment, can be found within functions/xor_rxor_with_cpd_functions.py.

In this experiment, we will run two progressive learning classifiers. The first will be given an XOR task and an R-XOR task (adaptation off). The second will be given an XOR task and an R-XOR task adapted to the original XOR, i.e. A-XOR (adaptation on). The backward transfer efficiency of both classifiers will be evaluated over a wide range of angles of the R-XOR task.

We first declare the hyperparameters to be used for the experiment, which are as follows:

- mc_rep: number of repetitions to run the progressive learning algorithm for
- angle_sweep: angles to test
- task1_sample: number of task 1 samples
- task2_sample: number of task 2 samples

[3]:

angle_sweep = range(0, 91, 10)
task1_sample = 100
task2_sample = 100
mc_rep = 500
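These hyperparameters drive a Monte Carlo sweep: for each angle, the experiment is repeated mc_rep times and the results are averaged. The sketch below shows one way such a sweep could be organised. It is not the code in functions/xor_rxor_with_cpd_functions.py; `sweep_bte` and `placeholder_trial` are hypothetical names, the error values are made up, and taking the ratio of mean errors is an assumption.

```python
import numpy as np

def sweep_bte(run_trial, angles, mc_rep):
    """Average errors over mc_rep repetitions per angle, then take the ratio."""
    mean_bte = []
    for angle in angles:
        # each trial returns (task-1 error before task 2, task-1 error after task 2)
        errors = np.array([run_trial(angle) for _ in range(mc_rep)])
        err_before, err_after = errors.mean(axis=0)
        mean_bte.append(err_before / err_after)
    return np.array(mean_bte)

def placeholder_trial(angle):
    """Hypothetical stand-in: a real trial would train and test classifiers."""
    return 0.2, 0.1   # made-up constant error rates, for illustration only

result = sweep_bte(placeholder_trial, range(0, 91, 10), mc_rep=5)
print(result.shape)
```

The output has one entry per angle, which is the shape a BTE-versus-angle plot needs.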

Now, we’ll run the experiment.

First, we will run the classifier with adaptation off:

[4]:

mean_te1 = fn.bte_v_angle(
    angle_sweep, task1_sample, task2_sample, mc_rep, adaptation=False
)

Next, we will run the classifier with adaptation on:

[5]:

mean_te2 = fn.bte_v_angle(
    angle_sweep, task1_sample, task2_sample, mc_rep, adaptation=True
)

Lastly, plot the results:

[6]:

fn.plot_bte_v_angle(angle_sweep, mean_te1, mean_te2)